Combining Behaviour Trees With Reinforcement Learning

2018

For this project I created a C# library that contains the standard nodes used in Behaviour Trees, as well as logic for a new node type, RLSelector. This node uses Q-Learning to choose which of its child actions to execute for the NPC’s current state. It can also dynamically add or remove child nodes by selecting from an action pool, which lets it build its own set of children and reject actions that don’t help in the current situation.
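The selection and update logic described above can be sketched as a tabular Q-learning node. This is a minimal Python sketch, not the library's C# implementation: the name `RLSelector` is reused for readability, while the epsilon-greedy exploration, the parameters `alpha`, `gamma` and `epsilon`, and the `add_child`/`remove_child` helpers are assumptions made for illustration.

```python
import random
from collections import defaultdict


class RLSelector:
    """Hypothetical tabular Q-learning selector over a set of child actions."""

    def __init__(self, children, alpha=0.1, gamma=0.9, epsilon=0.1, seed=None):
        self.children = list(children)   # actions currently attached to the node
        self.alpha = alpha               # learning rate
        self.gamma = gamma               # discount factor for future rewards
        self.epsilon = epsilon           # probability of exploring a random child
        self.q = defaultdict(float)      # (state, action) -> estimated value
        self.rng = random.Random(seed)

    def choose(self, state):
        """Epsilon-greedy choice among the current children."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.children)
        return max(self.children, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state=None):
        """Q-learning update; next_state=None marks a terminal step (no bootstrap)."""
        if next_state is None:
            best_next = 0.0
        else:
            best_next = max(self.q[(next_state, a)] for a in self.children)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])

    def add_child(self, action_pool):
        """Attach one action from the pool that is not already a child, if any remain."""
        unused = [a for a in action_pool if a not in self.children]
        if unused:
            self.children.append(self.rng.choice(unused))

    def remove_child(self, action):
        """Detach a child so choose() no longer considers it."""
        self.children.remove(action)


if __name__ == "__main__":
    # Hypothetical per-attack rewards against one enemy type.
    rewards = {"fire": 2.0, "water": 4.0, "air": 10.0}
    selector = RLSelector(["fire", "water", "air"], epsilon=0.3, seed=1)
    for _ in range(1000):
        action = selector.choose("mudcrab")
        selector.update("mudcrab", action, rewards[action])
    selector.epsilon = 0.0  # switch to pure exploitation
    print(selector.choose("mudcrab"))
```

Passing `next_state=None` treats each attack as a one-step episode, so each Q-value becomes an exponentially weighted average of that attack's reward against that enemy; passing a real next state adds the usual discounted bootstrap term.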

I plugged this library into a Unity project to create a JRPG demo scenario. The NPC patrols between several enemies, each with different elemental resistances, and uses an RLSelector node to learn which attacks to use.

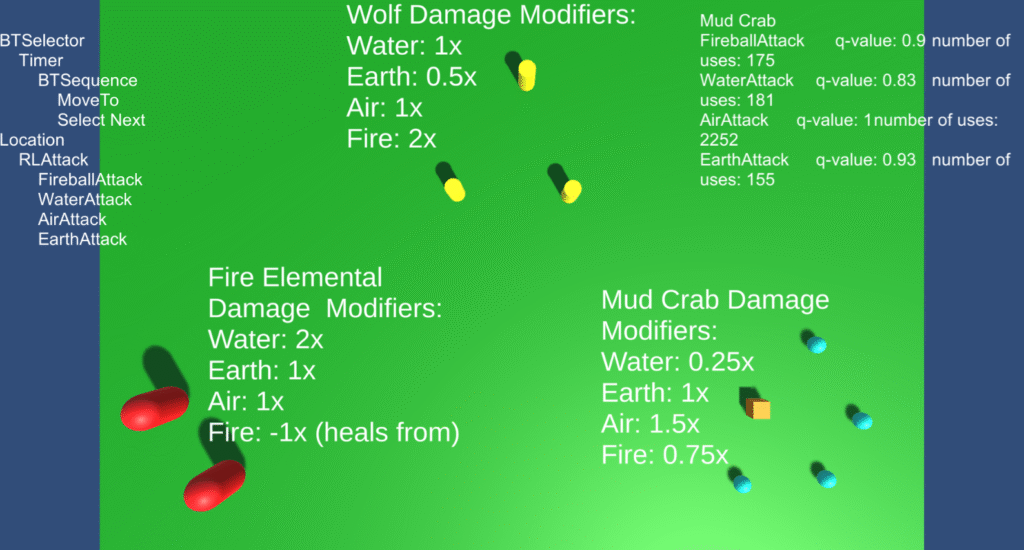

This is the demo scene I set up to showcase a reinforcement learning NPC. The behaviour tree is made up of a simple patrol behaviour, with an RLSelector node that chooses which attacks to use.
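As a rough illustration of how such a tree could be wired together, here is a minimal Python sketch of two standard composite nodes, a sequence and a selector, with a patrol leaf followed by an attack leaf. The node classes and the `patrol`/`attack` functions are hypothetical; in the demo, the attack slot is where the RLSelector node would sit.

```python
from enum import Enum, auto


class Status(Enum):
    SUCCESS = auto()
    FAILURE = auto()
    RUNNING = auto()


class Action:
    """Leaf node wrapping a function that returns a Status."""

    def __init__(self, name, fn):
        self.name = name
        self.fn = fn

    def tick(self, npc):
        return self.fn(npc)


class Sequence:
    """Runs children in order; returns the first status that is not SUCCESS."""

    def __init__(self, *children):
        self.children = children

    def tick(self, npc):
        for child in self.children:
            status = child.tick(npc)
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Selector:
    """Runs children in order; returns the first status that is not FAILURE."""

    def __init__(self, *children):
        self.children = children

    def tick(self, npc):
        for child in self.children:
            status = child.tick(npc)
            if status != Status.FAILURE:
                return status
        return Status.FAILURE


# Hypothetical leaves: record what happened and report success.
def patrol(npc):
    npc["log"].append("patrol")
    return Status.SUCCESS


def attack(npc):
    npc["log"].append("attack")
    return Status.SUCCESS


# Patrol to the next enemy, then attack it (the attack leaf stands in for RLSelector).
tree = Sequence(Action("patrol", patrol), Action("attack", attack))
```

Ticking `tree` once with `npc = {"log": []}` runs the patrol leaf and then the attack leaf; if patrolling returned `RUNNING` or `FAILURE`, the sequence would stop there and skip the attack.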

Each enemy has different resistances, which the NPC learns to exploit based on how much damage its elemental attacks deal. Over time, the NPC favours attacks that deal more damage.

The Mudcrab enemy is weakest to air attacks, so the NPC learns to attack with that element more often.
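One way to turn damage into a reward signal is to use the health an attack removed, so a hit that heals the enemy produces a negative reward. The Python sketch below assumes that rule; the `RESISTANCES` table and its multipliers are invented for illustration, chosen only to match the weaknesses described in this write-up (the Mudcrab is weakest to air; water hurts the fire elemental most, and fire heals it).

```python
# Hypothetical damage multipliers per enemy and element (not the demo's real values):
# > 1 means weak, < 1 means resistant, negative means the attack heals the enemy.
RESISTANCES = {
    "mudcrab": {"fire": 1.0, "water": 0.5, "air": 2.0},
    "fire_elemental": {"fire": -1.0, "water": 2.0, "air": 1.0},
}


def apply_attack(enemy, hp, element, base_damage=10.0):
    """Return the enemy's health after an elemental attack."""
    return hp - base_damage * RESISTANCES[enemy][element]


def attack_reward(enemy, hp, element, base_damage=10.0):
    """Reward = health removed by the attack; a healing hit gives a negative reward."""
    return hp - apply_attack(enemy, hp, element, base_damage)
```

Feeding `attack_reward` into a Q-learning update pushes the value of air attacks up against the Mudcrab, and pushes the value of fire attacks below zero against the fire elemental.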

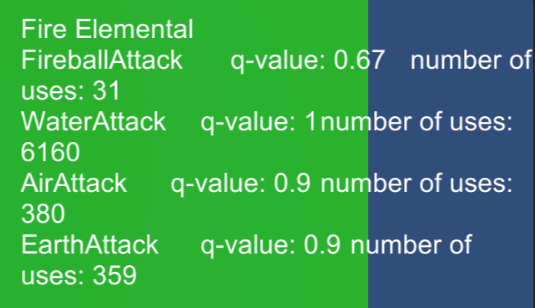

The q-value (top right) shows how “good” an action is relative to the current “best” action: q = 1 means it is currently perceived as the best action.
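Assuming the displayed value is each action's Q-value divided by the largest Q-value for the current state, the scaling could look like the Python sketch below. The function name, the example Q-values and the requirement that the best value be positive are assumptions; the demo's actual display rule may differ.

```python
def relative_q_values(q_values):
    """Scale each Q-value by the best one, so the current best action reads 1.0.

    Assumes the best Q-value is positive; a negative result means the action
    is expected to do harm (for example, healing the enemy).
    """
    best = max(q_values.values())
    if best <= 0:
        raise ValueError("relative scaling needs a positive best Q-value")
    return {action: q / best for action, q in q_values.items()}


if __name__ == "__main__":
    # Hypothetical Q-values against a fire elemental.
    print(relative_q_values({"fire": -5.0, "water": 8.0, "air": 4.0}))
    # prints {'fire': -0.625, 'water': 1.0, 'air': 0.5}
```

Dividing by the best value keeps the sign of each Q-value, so a harmful action still reads as negative, unlike min-max scaling, which would map the worst action to 0.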

I added the ability for the reinforcement learning node to reject especially bad actions after enough attempts. In this example, attacking a fire elemental with a fire attack heals it, which gives a negative reward. The NPC learns to stop using fire attacks against the fire elemental and focuses instead on water attacks, which deal the most damage.
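A rejection rule of this kind can be sketched by tracking each child's attempts and mean reward, then detaching it once it has been tried enough times and still averages below a threshold. In this Python sketch, `min_attempts`, `reject_below` and the class names are hypothetical, and the rule always keeps at least one child so the node has something to run.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ActionStats:
    attempts: int = 0
    total_reward: float = 0.0

    @property
    def mean_reward(self):
        return self.total_reward / self.attempts if self.attempts else 0.0


class RejectingChildren:
    """Hypothetical child list that drops actions with a persistently bad mean reward."""

    def __init__(self, children, min_attempts=5, reject_below=0.0):
        self.children = list(children)
        self.min_attempts = min_attempts   # attempts required before judging an action
        self.reject_below = reject_below   # mean reward below this gets an action rejected
        self.stats = defaultdict(ActionStats)
        self.rejected = set()

    def record(self, action, reward):
        """Log one reward and reject the action if it has proven consistently harmful."""
        s = self.stats[action]
        s.attempts += 1
        s.total_reward += reward
        if (s.attempts >= self.min_attempts
                and s.mean_reward < self.reject_below
                and action in self.children
                and len(self.children) > 1):
            self.children.remove(action)
            self.rejected.add(action)
```

Requiring a minimum number of attempts stops a single unlucky result from removing an action, while the negative-mean threshold targets exactly the case described above, where an attack heals the enemy.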